Debate

Large Language Models for Plasma Research - Curse or Blessing?

Large language models (LLMs) such as ChatGPT may change the way we do research. These systems serve as tools for literature searches, data analysis, and programming tasks. But what are the potential and the shortcomings of LLMs, especially for the highly interdisciplinary field of plasma research?

The advent of large language models (LLMs) such as ChatGPT will change the way we gather, process, and present scientific findings. LLMs are trained on information collected from the internet and compose answers based on the likelihood that one word follows another. As a result, the most common statements and phrases are amplified, on the assumption that common pieces of information are also correct. This is not necessarily true, and the amplification of wrong statements may distort any thorough assessment and analysis of a topic. This is referred to as the hallucination of LLMs [1]. The ease of gathering information and producing answers in a very readable form is a blessing; the amplification of wrong statements is a curse. Some publishers have already discussed this in the community and are addressing it [1-5]. But what are the potential and the shortcomings of LLMs, especially regarding plasma research?
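The amplification effect described above can be illustrated with a deliberately tiny sketch. It is not how ChatGPT or any real LLM is implemented; it is a toy word-pair (bigram) counter, and the corpus, the function names, and the example sentences are all hypothetical. It only shows that a completion chosen by likelihood of word succession follows the most frequent statement, whether or not that statement is correct.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count how often each word is followed by each other word."""
    successors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            successors[current][following] += 1
    return successors


def next_word_probabilities(successors, word):
    """Relative frequency of every word that was seen directly after `word`."""
    counts = successors[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def complete(successors, prompt, max_words=5):
    """Extend the prompt by always choosing the most frequent successor."""
    words = prompt.lower().split()
    for _ in range(max_words):
        counts = successors.get(words[-1])
        if not counts:
            break  # the last word was never followed by anything in the corpus
        words.append(counts.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus: the wrong statement is simply repeated more often.
corpus = [
    "the sky is green",
    "the sky is green",
    "the sky is green",
    "the sky is blue",
]

model = train_bigram(corpus)
print(next_word_probabilities(model, "is"))
print(complete(model, "the sky"))
```

In this toy corpus the wrong statement appears three times and the correct one only once, so the completion reproduces the wrong statement. The popularity of a statement, not its correctness, decides the outcome.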

LLMs offer several advantages. (i) LLMs will change how we gather information because they condense information from the internet very efficiently and filter out what is most relevant, with relevance judged by popularity. This is very helpful for many well-defined tasks, and LLMs will presumably replace the current search-engine approach to information gathering. Since plasma research is very interdisciplinary and its information is dispersed over many journals and conferences, automated literature searches by LLMs can help. (ii) LLMs may accelerate data analysis by suggesting concepts for filtering and fitting data with the most up-to-date algorithms. (iii) Simple programming tasks can be completed very quickly without the need to collect many different code snippets from the internet. If the task is not too elaborate, one or two attempts with an LLM are usually enough to obtain at least a starting version of a program. (iv) LLMs can generate at least a starting point for further research on most scientific topics. This is not too different from conventional research based on libraries, journal issues, or a search engine, but using LLMs is much more convenient. (v) LLMs help write scientific texts and produce highly readable output. This is their core expertise.

However, LLMs also exhibit challenges and shortcomings in plasma research. When an LLM searches the literature and collects information on a topic from the web, it does not account for the accumulation or repetition of wrong statements and findings. In some cases, the references that LLMs give to support their arguments are wrong. The answers of an LLM may also depend on the person who is asking, so that the same scientific question yields different outcomes. If one asks an LLM to explain well-known facts, the answer may be correct, but it may also oversimplify things. In some cases, the explanations of LLMs are plainly incorrect. This hallucination of LLMs can be an obstacle, especially in plasma research, where the community is interdisciplinary and somewhat smaller than in other fields. Consequently, only a few papers usually deal with a particular process or application, so the knowledge base for a specific topic is small and the statements these LLMs produce may not be robust. In that sense, the duty of every scientist to consult several sources, such as journal articles, textbooks, or discussions with colleagues, before assessing any information remains paramount.

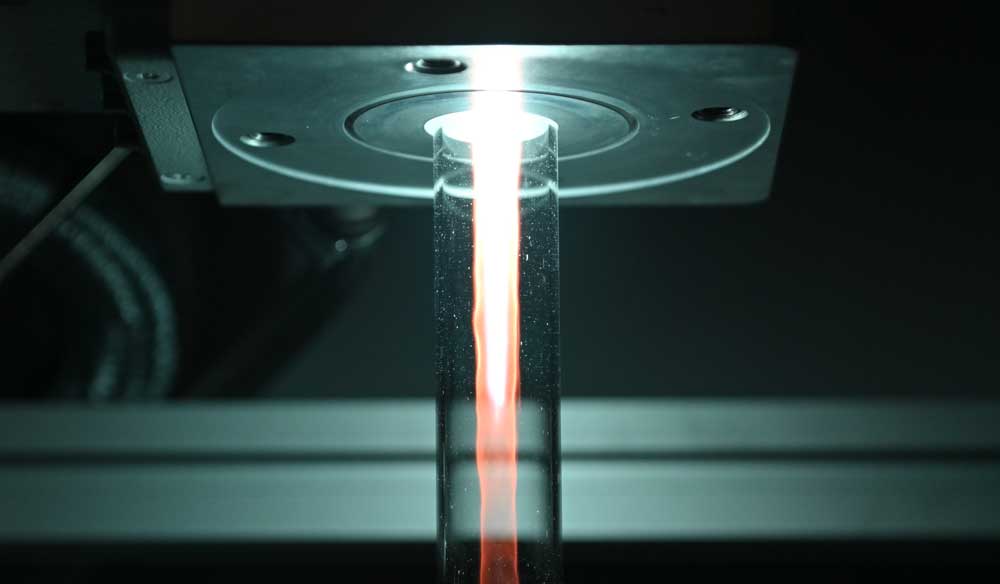

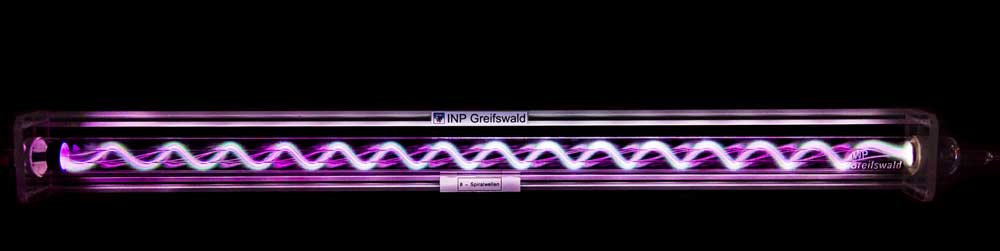

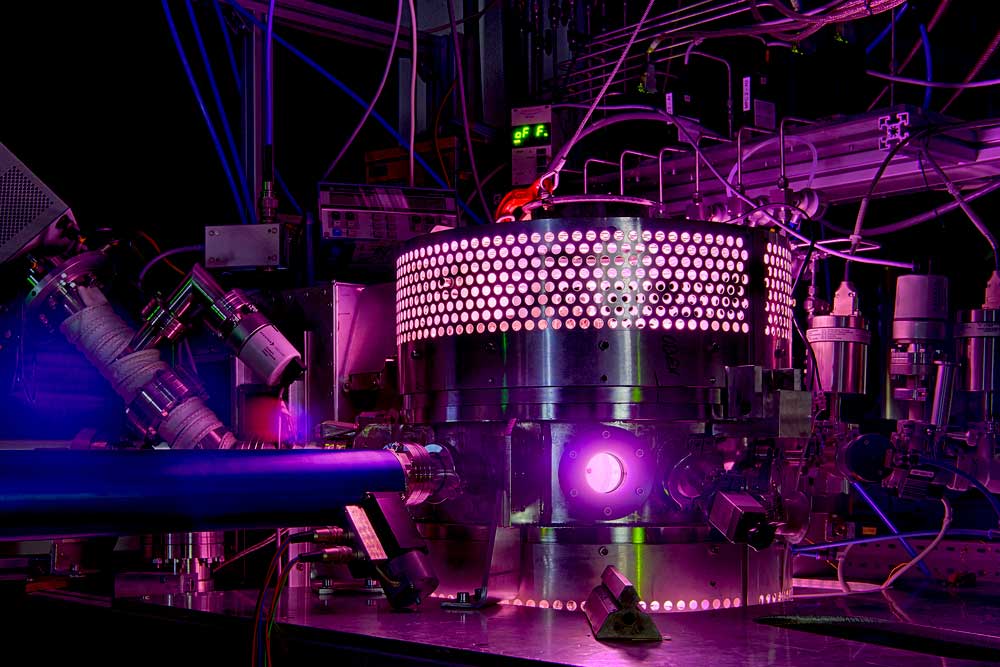

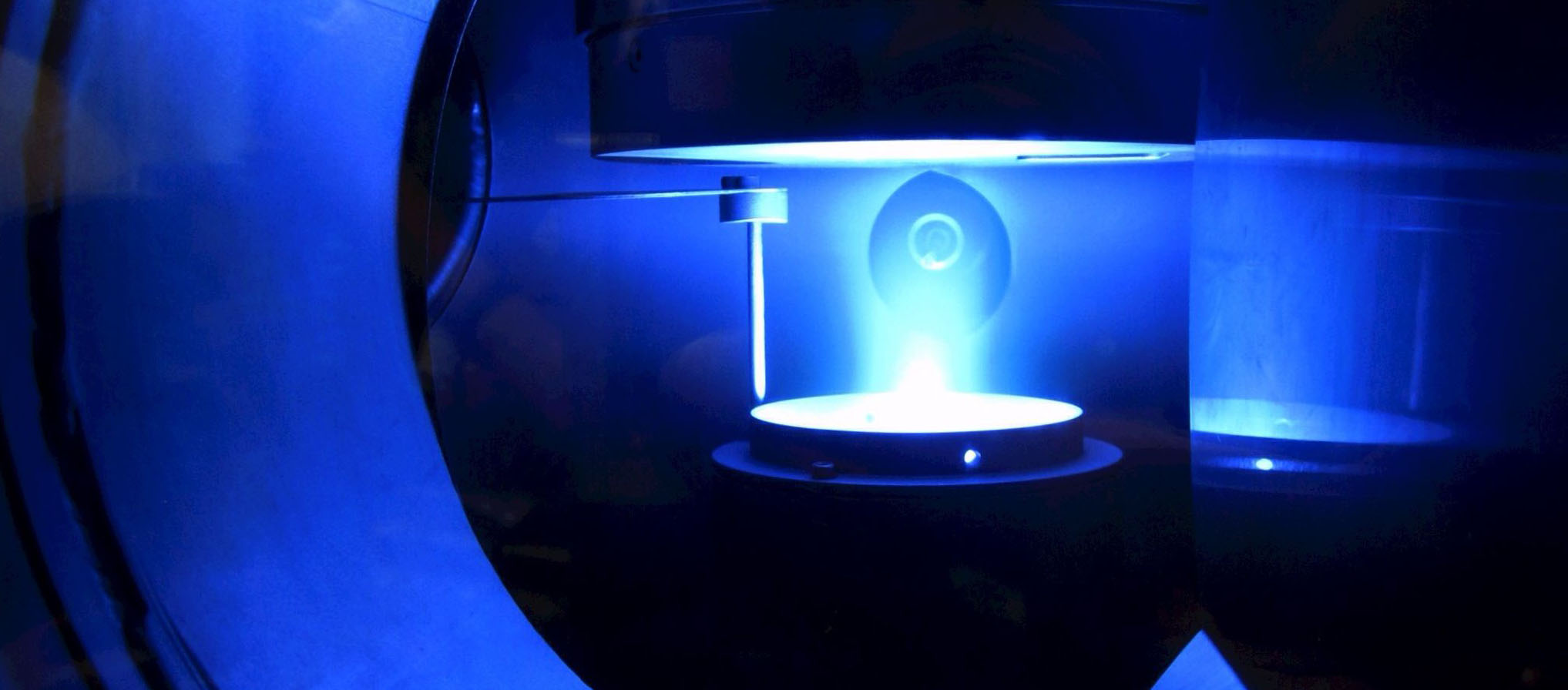

At the core of research is understanding fundamental principles and generating new insights or hypotheses. It is unclear whether LLMs can help here. For example, when comparing two plasma sources for a specific task, the LLM may recommend different combinations or operating parameters. However, deeper insight into the mechanisms may lead to the conclusion that an entirely different discharge concept is better suited. Commonly, statements are generated that one plasma method (such as DBD plasmas) is better than others (for example, microwave plasmas) or that “plasma” does not “work” for a particular application. The systems addressed in plasma research are so complex that such simple answers are useless unless they are put into proper context. Since plasma research is also performed by scientists with training in other fields (such as chemical engineering or solid-state physics), these oversimplified statements might not be questioned.

Finally, LLMs pose challenges for scientific journals. If one asks an LLM to explain a result or recommend a study, it necessarily collects information already published elsewhere. If authors repeat this information, they automatically repeat the research of others. This is a problem because LLMs allow us to quickly produce “nice” papers that only reproduce known information. This will inflate the number of published papers and dilute the “real” information going forward.

At present, there is a tendency to make it obligatory, for reasons of transparency, to inform the reader when AI has been used to generate a manuscript or to interpret a result (the authors, however, still bear full responsibility for the paper [3]). This requirement may not be compelling, since standard data visualisation and analysis tools and spell-checking software are already in common use without being referenced. LLMs could be just another tool on that list, since verification by the scientist will always be required.

In the end, it will be interesting to see how the scientific community integrates LLMs into the daily workflow. This transition is happening, but the input and guidance of critical scientists will always be necessary.

[1] ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope, Partha Pratim Ray, Internet of Things and Cyber-Physical Systems 3, 121-154 (2023)

[2] ChatGPT: five priorities for research, Eva A. M. van Dis, Johan Bollen, Robert van Rooij, Willem Zuidema & Claudi L. Bockting, Nature 614, 224 (2023)

[3] ChatGPT is fun, but not an author, H. Holden Thorp, Science 379, 313 (2023)

[4] Exploring the potential of using ChatGPT in physics education, Yicong Liang, Di Zou, Haoran Xie & Fu Lee Wang, Smart Learning Environments 10, 52 (2023)

[5] After enabling AI it is time for the plasma community to benefit from AI – Personal view in a short perspective article, E. Kessels, Atomic Limits Blog, PMP Group TU Eindhoven, 7.5.2023